In the year 2000, just about half of all American adults were online. Today, nine in ten adults in the United States use the internet, according to Pew Research Center.

And in 2020, Americans, along with the rest of the world, are spending even more time online. Globally, people have been spending 45% more time on social media since March 2020, with a 17% increase in the U.S., according to Statista.

Unfortunately, as our time spent online has increased, so has the chance that we may come across abusive content on the platforms where we should all feel safe.

Because we are not a direct-service organization, Thorn is not able to field reports of child sexual abuse material (CSAM) or child sex trafficking. But since we build software and tools aimed at detecting, removing, and reporting abuse content, we can help to point you in the right direction should you ever inadvertently come across harmful content.

Reporting this content through the right channels as a community helps to keep platforms safe, and could lead to the identification of a victim or help to end the cycle of abuse for survivors.

Here’s what to do if you find CSAM or evidence of child sex trafficking online.

Child sexual abuse material and child sex trafficking

First we need to talk about what child sexual abuse material (CSAM) is, and how it’s different from child sex trafficking.

Child sexual abuse material (legally known as child pornography) refers to any content that depicts sexually explicit activities involving a child. Visual depictions include photographs, videos, and digital or computer-generated images indistinguishable from an actual minor. To learn more about CSAM and why it’s a pressing issue, click here.

As defined by the Department of Justice, child sex trafficking “refers to the recruitment, harboring, transportation, provision, obtaining, patronizing, or soliciting of a minor for the purpose of a commercial sex act.” To learn more, click here.

Now let’s look at how to report this type of content should you ever come across it online:

1. Never share content, even in an attempt to make a report

It can be shocking and overwhelming to see content that appears to be CSAM or related to child sex trafficking, and your protective instincts might kick into high gear. Please know that you are doing the right thing by wanting to report this content, but it’s critical that you do so through the right channels.

Never share abuse content, even in an attempt to report it. Social media can be a powerful tool to create change in the right context, but keep in mind that every instance of CSAM, no matter where it’s found or what type of content it is, is a documentation of abuse committed against a child. When that content is shared, even with good intentions, it spreads that abuse and creates a cycle of trauma for victims and survivors that is more difficult to stop with every share.

It’s also against federal law to share or possess CSAM of any kind, which is legally defined as “any visual depiction of sexually explicit conduct involving a minor (someone under 18 years of age).” State age of consent laws do not apply under this law, meaning federally a minor is defined as anyone under the age of 18.

The same goes if you think you’ve found illegal ads promoting the commercial sexual exploitation of children (CSEC), such as child sex trafficking. Sharing this content publicly may unwittingly extend the cycle of abuse. Instead, be sure to report content via the proper channels as outlined below.

2. Report it to the platform where you found it

The most popular platforms usually have guides for reporting content. Here are some of the most important to know:

But let’s take a moment to look at reporting content to Facebook and Twitter.

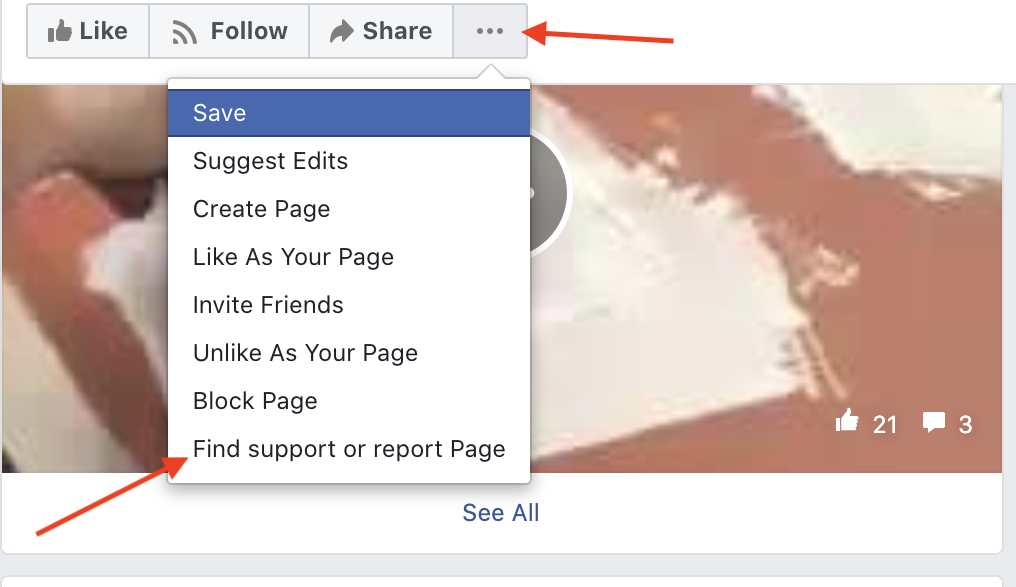

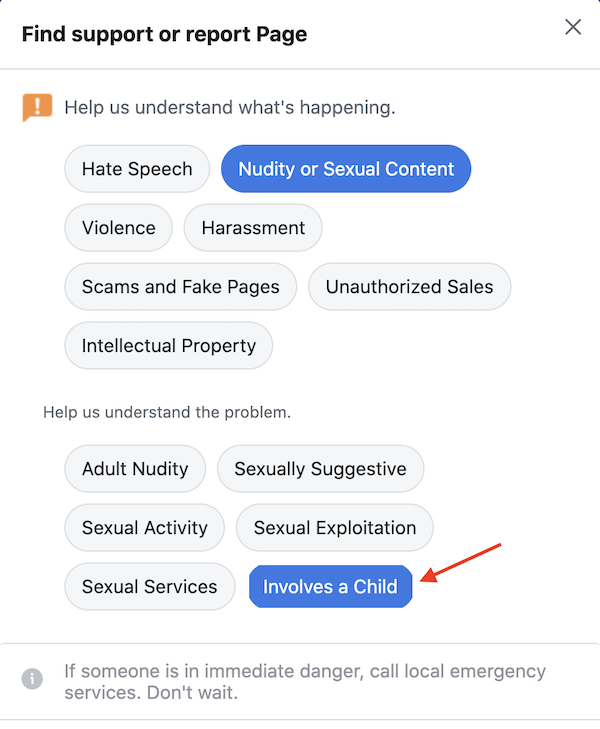

Reporting content to Facebook

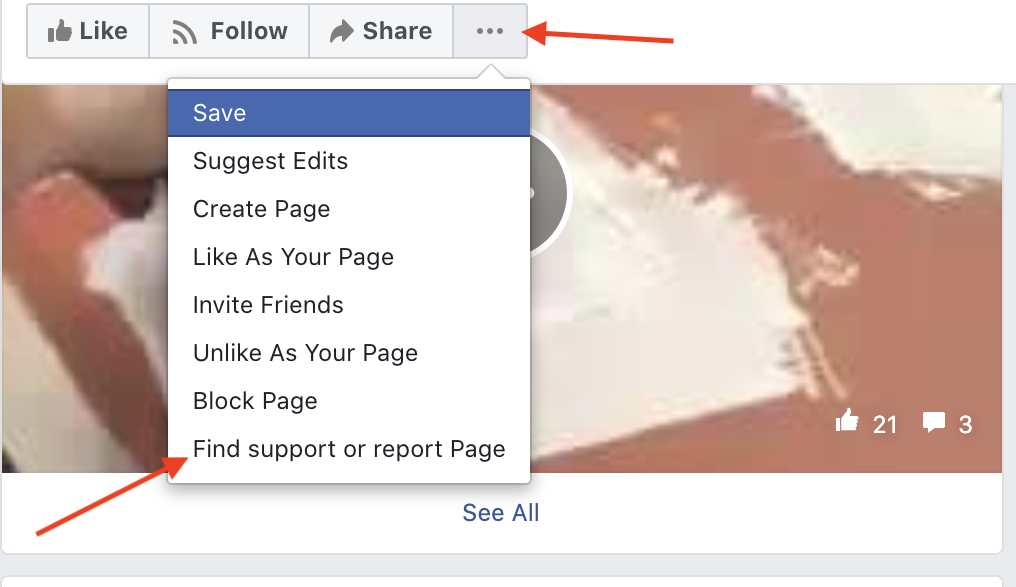

Whether you want to report a page, post, or profile to Facebook, look for the three dots to the right of the content, click on them, and then click on Find support or report Page.

From there you will be guided through the process and will get a confirmation that your report has been received. Be sure to select Involves a Child when making your report.

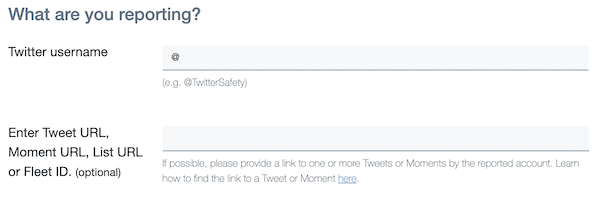

Reporting content on Twitter

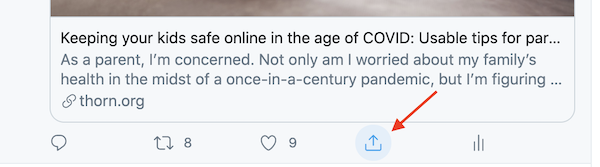

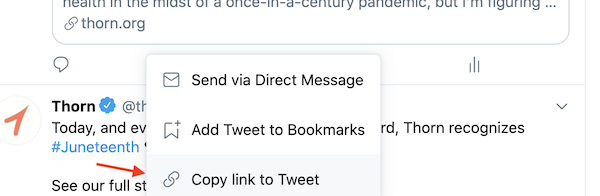

While you can report a tweet for violating Twitter’s policies in a similar way to Facebook content (clicking the button to report a tweet), if you are reporting child exploitation content on Twitter, there’s a separate process that ensures reports of CSAM or other exploitative content are given priority.

First, click here to see what content violates Twitter’s child exploitation policies. Then fill out this form with the appropriate information, including the username of the profile that posted the content, and a link to the content in question.

To find the direct link to a tweet, click the share button at the bottom of the tweet and select Copy link to Tweet.

For any other platforms, you should always be able to easily find a way to report abuse content with a quick online search. For example, search for: Report abusive content [platform name].

The National Center for Missing and Exploited Children also offers overviews for reporting abusive content for multiple platforms here.

3. Report it to CyberTipline

The National Center for Missing and Exploited Children (NCMEC) is the clearinghouse for all reports of online child sexual exploitation in the United States. That means they are the only organization in the U.S. that can legally field reports of online child sexual exploitation. If NCMEC determines it to be a valid report of CSAM or CSEC, they will connect with the appropriate agencies for investigation.

This is a critical step in addressing the sexual exploitation of children online. Be sure to fill out as much detail as you’re able.

4. Report CSEC to the National Human Trafficking Hotline

If you find evidence of child sex trafficking, call the National Human Trafficking Hotline at 1-888-373-7888.

Managed by Polaris, the hotline offers 24/7 support, as well as a live chat and email option. You can also text BEFREE (233733) to discreetly connect with resources and services.

5. Get your content removed and connect with resources

If you have been the victim of explicit content being shared without consent, the Cyber Civil Rights Initiative put together a guide for requesting content to be removed from most popular platforms.

If you have been the victim of sextortion (a perpetrator using suggestive or explicit images as leverage to coerce you into producing abuse content), take a look at our Stop Sextortion site for more information and tips on what to do.

6. Practice wellness

Close the computer. Take a deep breath. Go for a walk.

This is an extremely difficult issue, and if you’ve just gone through the steps above, it means you’ve recently encountered traumatic material.

But you’ve also just taken a first step in what could ultimately be the rescue of a child or the cessation of a cycle of abuse for survivors.

Practice the things that create balance and support in your life, and if you need to, connect with additional resources. Text the Crisis Text Line to connect discreetly with trained counselors 24/7.

Or maybe you’re left with the feeling that there’s more work to be done. Learn more about local organizations working in this space and see if they offer volunteer opportunities. Fundraising for your favorite organizations can also make a huge difference.

If you’re here, whether you’re making a report or just equipping yourself with knowledge should you ever need it, you’re joining a collective movement to create a better world for kids. Know that you are part of a united force for good, one that won’t stop until every child can simply be a kid.